
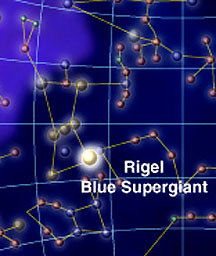

Original Windows to the Universe artwork by Randy Russell, using a simulated view of Orion generated by Dennis Ward.

Magnitude - a measure of brightness

Astronomers use the term "magnitude" to describe the brightness of an object. The magnitude scale for stars was invented by the ancient Greeks, possibly by Hipparchus around 150 B.C. The Greeks grouped the stars they could see into six brightness categories. The brightest stars were called magnitude 1 stars, while the dimmest were put in the magnitude 6 group. So, in the magnitude scale, lower numbers are associated with brighter stars.

Modern astronomers, using instruments to measure stellar brightnesses, have refined the system initially devised by the Greeks. They decided that five steps in magnitude should correspond to a brightness difference of a factor of exactly 100. A magnitude 1 star is thus 100 times brighter than a magnitude 6 star. A difference of 1 in magnitude therefore corresponds to a brightness factor equal to the fifth root of 100, which is about 2.512, or roughly 2.5. A magnitude 3 star is about 2.5 times brighter than a magnitude 4 star, while a magnitude 4 star is about 2.5 times brighter than a magnitude 5 star.
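
As a quick numerical check of the step size, the short sketch below computes the brightness factor for a single magnitude as the fifth root of 100. The name `STEP` is only an illustrative choice.

```python
# Five magnitudes correspond to a brightness factor of 100,
# so one magnitude corresponds to the fifth root of 100.
STEP = 100 ** (1 / 5)

print(round(STEP, 3))      # brightness factor for one magnitude, about 2.512
print(round(STEP ** 5))    # five one-magnitude steps multiply back to 100
```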

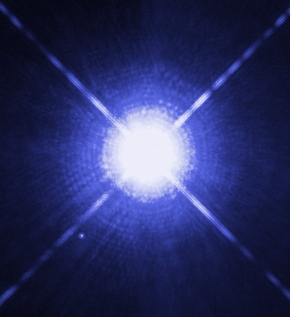

Exceptionally bright objects, like the Sun, and very dim objects, such as faint stars that can only be seen with telescopes, have driven astronomers to extend the magnitude scale beyond the values of 1 through 6 used by the Greeks. We now recognize magnitudes less than one (including negative numbers) and greater than six. Sirius, the brightest star in our nighttime sky, has a magnitude of minus 1.4. The largest modern ground-based telescopes can spot stars as dim as magnitude 25 or higher. The human eye, without the aid of a telescope, can see stars as faint as 6th or 7th magnitude.

Astronomers distinguish between the apparent magnitude and the absolute magnitude of a star. "Apparent magnitude" refers to how bright a star appears to us when we view it at night from Earth. However, some stars are much farther from us than others, and thus appear much dimmer simply because they are far away. Astronomers wanted a way to describe the inherent brightnesses of stars, irrespective of their distances from us. They came up with the absolute magnitude scale. If we could place all stars a fixed distance away, and then see how bright each star looked, we would know the "absolute magnitude" of each star. We can't actually move stars around, but we can calculate how bright a star would be if placed at the agreed-upon fixed distance of ten parsecs (about 32.6 light years). Our Sun, for example, would be an inconspicuous magnitude 4.83 star at this distance. The absolute magnitude scale allows astronomers to make "apples to apples" comparisons of the intrinsic brightnesses of stars. Astronomers often use the letter "m" to denote apparent magnitude, and "M" to signify absolute magnitude.
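
The calculation mentioned above can be sketched in a few lines. This example assumes the standard distance relation M = m - 5 log10(d / 10 parsecs), which is not spelled out in this article, and it ignores any dimming by dust between us and the star. The function name `absolute_magnitude`, the constant `AU_PER_PARSEC`, and the Sun's apparent magnitude of -26.74 (a commonly quoted value, often rounded to -26.8) are assumptions made for illustration.

```python
import math

AU_PER_PARSEC = 206264.806  # astronomical units in one parsec


def absolute_magnitude(apparent_mag, distance_pc):
    """Magnitude an object would have if it were moved to 10 parsecs.

    Uses M = m - 5 * log10(d / 10), with d in parsecs.
    """
    return apparent_mag - 5 * math.log10(distance_pc / 10)


# Assumed inputs: the Sun seen from 1 AU, with apparent magnitude -26.74.
sun_distance_pc = 1 / AU_PER_PARSEC
sun_abs = absolute_magnitude(-26.74, sun_distance_pc)
print(round(sun_abs, 2))  # close to the 4.83 quoted in the text
```

A star that already sits at 10 parsecs has the same apparent and absolute magnitude, which is a handy sanity check for the function.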

Whether discussing absolute or apparent magnitude, if we want to compare the magnitudes and brightnesses of two stars, we can use the following equation:

$$m_2 - m_1 = 2.5 \, (\log b_1 - \log b_2) = 2.5 \, \log (b_1 / b_2)$$

... where $m_1$ and $m_2$ are the magnitudes of star 1 and star 2, $b_1$ and $b_2$ are the brightnesses of the two stars, and the logarithms are base 10. The coefficient is exactly 2.5 because a brightness ratio of 100 (whose logarithm is 2) must correspond to a difference of 5 magnitudes.

For example, Polaris, the North Pole Star, has an apparent magnitude of 1.97. Since Sirius has an apparent magnitude of minus 1.4, Sirius is [1.97 - (-1.4)] = 3.37 magnitudes brighter than Polaris. Plugging into the equation:

$$1.97 - (-1.4) = 3.37 = 2.5 \, \log (b_{\text{Sirius}} / b_{\text{Polaris}})$$

Solving for the brightnesses, we find that $b_{\text{Sirius}} / b_{\text{Polaris}} = 10^{3.37/2.5} \approx 22$. In other words, Sirius appears about 22 times brighter than Polaris.

As a second example, let's say we know that a star is 5 times brighter than Mizar (one of the stars in the Big Dipper), which has an apparent magnitude of 2.23. What is the magnitude of the brighter star? We start off with the fact that $b_{\text{bright star}} / b_{\text{Mizar}} = 5$. We then plug into the equation:

$$m_{\text{Mizar}} - m_{\text{bright star}} = 2.23 - m_{\text{bright star}} = 2.5 \, \log (b_{\text{bright star}} / b_{\text{Mizar}}) = 2.5 \, \log 5 = 1.75$$

$$m_{\text{bright star}} = 2.23 - 1.75 = 0.48$$
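
Both worked examples can be checked numerically. The sketch below uses the coefficient 2.5 that follows from defining five magnitudes as a brightness factor of 100. The function names `brightness_ratio` and `magnitude_from_ratio` are hypothetical helpers chosen for this illustration.

```python
import math


def brightness_ratio(m_faint, m_bright):
    """How many times brighter the second object is than the first."""
    return 10 ** ((m_faint - m_bright) / 2.5)


def magnitude_from_ratio(m_reference, ratio):
    """Magnitude of an object `ratio` times brighter than the reference."""
    return m_reference - 2.5 * math.log10(ratio)


# Sirius (magnitude -1.4) compared with Polaris (magnitude 1.97)
print(round(brightness_ratio(1.97, -1.4)))

# A star 5 times brighter than Mizar (magnitude 2.23)
print(round(magnitude_from_ratio(2.23, 5), 2))
```

The two functions are inverses of each other: feeding the ratio from the first back into the second recovers the brighter object's magnitude.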

When you look at the nighttime sky, you can see stars with magnitudes ranging between -1.4 (Sirius, the brightest) and around 6 or 7 (the limit for the "naked eye", without binoculars or a telescope). If some of the planets are out, you might be treated to bright Venus (magnitude -4.4 at its brightest) or red Mars (which can be as brilliant as magnitude -2.8). If the Full Moon is out, you can gaze upon an object with a magnitude of -12.6. Once the Sun rises, don't look directly at it; at magnitude -26.8 you'll damage your eyes if you do!

Pluto has a magnitude around 14, so you'll need a pretty big telescope to spot it; it is much, much dimmer than the 6th magnitude stars you can barely make out with your eyes. The world's largest ground-based telescopes can detect stars in the magnitude 25 to 27 range. The Hubble Space Telescope has been able to image stars with magnitudes around 30!
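
To get a feel for how much brightness these magnitudes span, the sketch below converts the values quoted above into brightness relative to a magnitude 6 star, roughly the naked-eye limit. The dictionary `objects` and the helper `brightness_ratio` are illustrative names, and the magnitudes are simply the rounded figures from the text.

```python
def brightness_ratio(m_faint, m_bright):
    """How many times brighter the second object is than the first."""
    return 10 ** (0.4 * (m_faint - m_bright))


# Magnitudes as quoted in the text (rounded values)
objects = {
    "Sun": -26.8,
    "Full Moon": -12.6,
    "Venus": -4.4,
    "Sirius": -1.4,
    "Pluto": 14.0,
    "Hubble limit": 30.0,
}

NAKED_EYE_LIMIT = 6.0

for name, mag in objects.items():
    # Values above 1 are brighter than a magnitude 6 star, below 1 are dimmer.
    ratio = brightness_ratio(NAKED_EYE_LIMIT, mag)
    print(f"{name:14s} {ratio:.3g}")
```

Pluto comes out more than a thousand times dimmer than the faintest stars visible to the unaided eye, which is why a telescope is needed to see it.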